Here we are at week 2 of the SRTP Foundations course. According to the syllabus, we are starting to talk about responsible tech policy, specifically developing a framework that we will use to examine tech policy. The pre-reads try to cover what responsible tech actually means and discuss the different players in the Canadian landscape.

I wanted to focus this blog post on the principles around “responsible tech”. At this point I’ve read many lists that try to capture all the altruistic things we should consider when adopting, using, and building responsible tech. One of the recommended course materials was a link to the Government of Ontario website on Principles for Ethical Use of AI. If you don’t feel like reading it, here are the major headings.

- Transparent and explainable

- Good and fair

- Safe

- Accountable and responsible

- Human centric

- Sensible and appropriate

While I do like the idea behind many of these lists, I find most are seriously lacking and won’t lead to the results we are hoping for. Don’t get me wrong, the words used in these lists are great, but we need much more if we are going to get this right.

Hello, consistency?

If you read the list above from the Government of Ontario, what stands out to you? For me, it was this: does anything on this list apply only to responsible AI technology use? I would imagine that you could take this list and apply it to almost anything (government or otherwise), and maybe that is exactly what we should be doing. For example, wouldn’t you want all government processes to be transparent and explainable? Wouldn’t you want all technology, regardless of AI use, to be good and fair?

Maybe instead of creating lists focused on responsible AI, we should move to creating principles we can all agree on to form the basis of our society. I can assure you that some of the people behind this document have Apple technology. Maybe if they actually cared about things being good and fair, they wouldn’t purchase from a company that exploits labour all around the world to deliver its record profits.

The point is that if we really want to change the destiny of the AI-augmented technology we are seeing, we probably need to start by changing the culture that this technology is designed for, and not just create aspirational rules and apply them only to this specific area.

Some are contradictory

A common theme among these principle lists is to include a bunch of altruistic words that sound great, and then give them really broad definitions that make them hard to understand and apply. One example to think about here is the potential contradiction between good and fair and human centric. All technology throughout history has had effects on human society, but not all humans were affected equally. For a recent example, look at how schools handled the pandemic. It was human centric to recognize the public benefit that the internet and laptops brought to kids. But all kids? What about those without access to this technology? In fact, many school boards decided not to hold classes because doing so would have treated some students unfairly. Was that decision fair to the others?

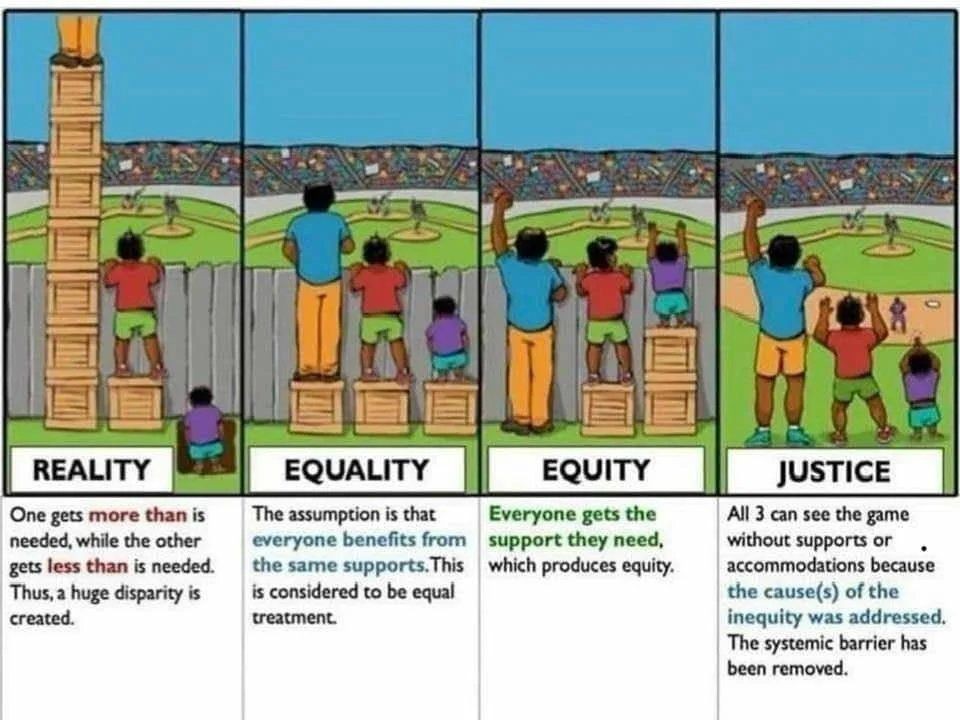

Even within the good and fair sub-heading you get the following two values: equality and fairness. This reminds me of that famous cartoon showing equality vs equity. I got this copy from here.

They are all positive

The last point I’ll make here is that all the outcomes are written to be positive. This isn’t a bad thing, but anyone in IT knows that if you design your systems based on “the best case”, you’ll end up paying for it in the future. If you read the document, you’ll see that they address this concern by stating that these principles should be continuously evaluated. Once a system is in production, though, changing it (or removing it) becomes very difficult, particularly as other services and features are built on top of this technology. This video captures it perfectly … at least from an IT perspective. None of the principles listed here centre on designing the technology to be able to react to bad outcomes, or to be flexible enough to be changed once deployed. The accountable and responsible section is the one that comes closest to this, stipulating that a method of redress should be designed for people. At the speed that our world moves, I’m skeptical that proper, fair redress mechanisms can actually be designed given current constraints.

Conclusion

I don’t think stating principles is a bad idea, I just think that the way we are doing it isn’t sufficient. We need to change the landscape that AI is playing in, not necessarily AI itself. Trying to hold just this technology to a different standard seems like a bad way to go, and seems like it won’t outlast the technology it is governing. Social media platforms were always about selling advertising, but they didn’t “stipulate” that in a written document ahead of time and try to defend the public good of that outcome. They focused on the “keeping us connected” part of the story and spun the rest. I see more of the same happening here with AI.