In the book I Am a Strange Loop, Douglas Hofstadter introduces an interesting concept: trying to measure the souledness of various things on earth. In it, he comes up with an artificial scale of souledness and makes up a unit of measurement called a huneker. Just as arbitrary is what actually constitutes a huneker, and how you would accumulate (or acquire, or evolve) hunekers over time. He postulates that a human baby would start at 1 huneker and, as it grows, eventually reach 100 hunekers.

I found this concept interesting, and as I was reading, my mind wandered to what such a scale would look like for measuring the cyber security maturity of an organization. I guess the first question to explore is how we measure maturity currently, and what a change in approach could potentially yield for us.

A Detour to the C2M2

I took a quick look at the Cybersecurity Capability Maturity Model (C2M2) to get a better understanding of both the process for measuring maturity and what is actually measured.

As you would expect, the process itself is quite simple. You perform a self-assessment, selecting a maturity level (1 to 3) for each of the sections under test. Each section describes what is under test, and what is “true” of a capability at a given maturity. For example, in the first section, asset, change, and configuration management, the first objective and practice is to manage the IT and OT asset inventory. You could self-identify at level 3 if you have a full asset inventory that is periodically updated according to defined triggers. Cool cool.

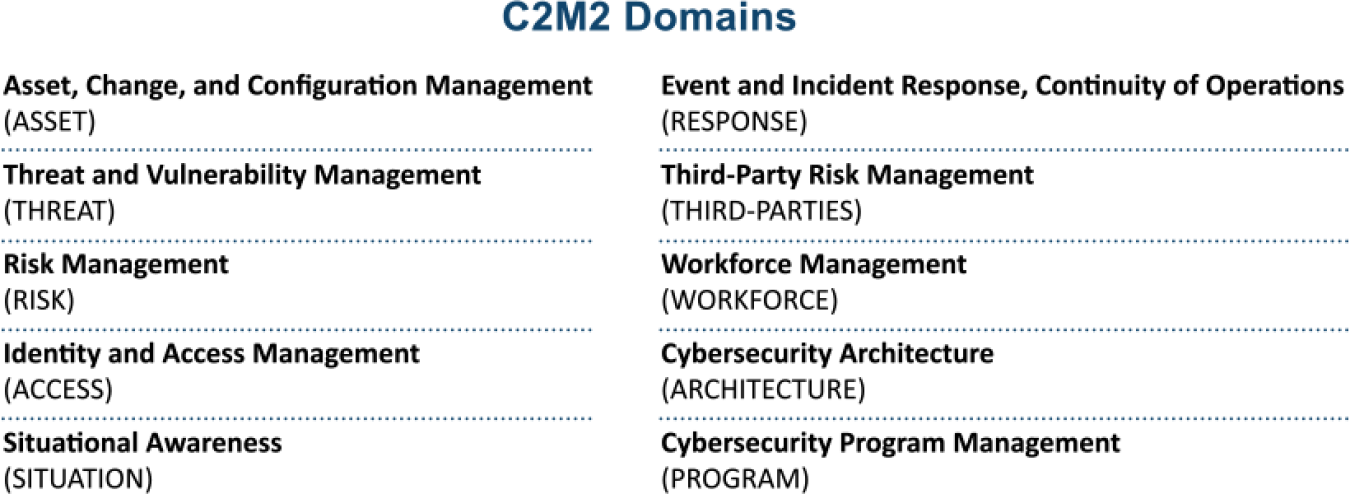

The C2M2 Domains look something like this:

Let’s take a look at some other domains. Situational Awareness seemed promising to me, but it ended up being extremely narrowly focused. As per the document:

“Situational Awareness involves developing near-real-time knowledge of a dynamic operating environment”

Reading further, the model effectively treats situational awareness as purely an internal view of the components you have, ensuring you have visibility of and accountability for those systems. There is nothing about the context you might find yourself in and how that might be changing (for example, if you are in M&A talks, you may need to heighten security), or about what might be going on within your industry.

Another domain that caught my attention was Workforce Management. It asserts that the same tired old controls should be in place to secure organizations. These include security awareness training and the development of a dedicated cybersecurity workforce that is also routinely trained. From a reporting perspective, it talks about how the “effectiveness” of these activities should be measured, but doesn’t really say how you would actually go about doing that. Further, while it does mention that organizations should have proper policies and procedures, it doesn’t talk about any other context or landscape changes you could make to your organization to increase security.

Ultimately, most maturity models follow the same bottom-up and top-down split in terms of measurement. They focus on things that can be measured empirically (because you can only manage what you measure, or something like that). This approach can lead to a ton of waste (a full asset inventory is only useful if it actually helps with your recovery process), and it doesn’t really cover everything that makes up an organization (I would expect a mature organization to assign an appropriate budget for cyber security, for example).

Back to our model

Let’s suppose for a second that the maturity of an organization’s security program consists of elements you could measure directly (say, the number of cyber security professionals you have) along with elements you can’t quite measure (for example, culture). For the sake of argument, let’s come up with a unit to measure our cyber security maturity: the securian.

To provide some context, let’s assume that most organizations sit somewhere between 1 and 10 securians on the scale. They all do some security stuff to varying degrees, with varying motivation for doing it (forced via regulatory compliance, or willingly because they aren’t run by lawyers). Let’s say the bottom of the scale is 0, which represents an organization doing “nothing extra” in terms of security (i.e., always accepting the defaults). For the sake of our thought experiment, let’s also assume there is no way to have a negative securian score, although I’d imagine some companies (Equifax?) probably operate in willful disobedience of IT norms.

Okay, so how would we describe progression up the scale in securians? For me, it makes sense to understand this progression in terms of optimization. Optimization as a measure can be applied to both the tangible (tools, controls, people) and the intangible (intelligence, leadership, culture, etc.). You would combine the various levels of optimization to determine your securian score. Lastly, the scale wouldn’t have a “top level” or anything like that. The goal of any organization would be to achieve as many securians as it thinks it wants to have! This is similar to how you would act in your own life! Have your own opinions, and stop looking to the man (NIST, I’m looking at you) to tell you what you should be doing. Not having enough securians will (okay… should) obviously open you up to bad things (ideally jail time… again… looking at you, Equifax).

So, how would I define optimization?

I think the first aspect of optimization is “just-in-time-ness” (yes! I get another made-up word!). Ultimately, I want to spend effort on security only when I need it. In the cloud world anyway, this is a lot like matching the demand curve for requests with the supply of compute. The utopia is a supply curve that exactly matches the demand curve.
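To make the demand/supply analogy a little more concrete, here is a minimal Python sketch of one possible just-in-time-ness score. Everything in it is an assumption for illustration: the function name `just_in_time_ness`, the idea of sampling security “demand” and security effort (“supply”) over the same time periods, and the choice to score the total mismatch relative to the total of both curves. A perfect match scores 1.0, and effort that never coincides with need scores 0.0.

```python
def just_in_time_ness(demand: list[float], supply: list[float]) -> float:
    """Score how closely security effort (supply) tracks security need (demand).

    Hypothetical metric: both series cover the same time periods and must be
    non-negative. Returns 1.0 for a perfect match and 0.0 when effort never
    coincides with need.
    """
    if len(demand) != len(supply):
        raise ValueError("demand and supply must cover the same time periods")
    if any(x < 0 for x in demand) or any(x < 0 for x in supply):
        raise ValueError("demand and supply must be non-negative")
    total = sum(demand) + sum(supply)
    if total == 0:
        # No need and no effort: trivially "just in time".
        return 1.0
    # Effort spent when it isn't needed, plus need left uncovered.
    mismatch = sum(abs(d - s) for d, s in zip(demand, supply))
    # For non-negative values, mismatch <= total, so the score stays in [0, 1].
    return 1.0 - mismatch / total


# Hypothetical weekly numbers: demand spikes in week 2 (say, a production launch).
demand = [2.0, 4.0, 2.0]
flat_effort = [3.0, 3.0, 3.0]     # same effort every week regardless of need
matched_effort = [2.0, 4.0, 2.0]  # effort ramps up exactly when needed
print(just_in_time_ness(demand, flat_effort))     # ~0.82
print(just_in_time_ness(demand, matched_effort))  # 1.0
```

The flat, always-on effort still scores reasonably well here; the point of the metric is only that the matched profile scores higher for the same need.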

So what does “just-in-time-ness” get me? Well, it allows me (or my organization, in this case) to be flexible in its approach to security for any given context. It allows me to keep innovating (maybe in a safe space, or with wide guardrails) and to focus the controls as the solution starts to be applied in production contexts. It is also a measure of how well automation is used to apply controls. For example, security controls can sometimes act as a constraint (one that you want) within an environment. Having the tooling to enforce the right controls correctly means new solutions have to be designed “for production” from the start, which helps teams fail fast when a solution won’t work “in the real world”.

The next aspect for me would be the “context-awareness” of the program. This would be a measure of the types of solutions the organization produces, and how fit-for-purpose they are for the problem or context at hand. I would rate an organization that took a context-specific view of cyber security for one of its functions (I know, I’m throwing undefined terms around like crazy) and made appropriate decisions as having more securians than a company that just applied generic security advice. It would also be a measure of how “embedded” the security function is within the organization. For example, I would value an organization where security decisions are made close to the problems (within teams) over one where they are made by central teams and pushed out.

The last would be “adaptability”. This would effectively be a measure of the program’s ability to recombine (or to recombine its components) to meet new threats. It goes beyond “just add AI” to cyber security solutions; it means understanding the impact of emerging threats in real time. I mean, what I’m saying here is probably all impossible anyway, so why not shoot for the stars!
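If you wanted to play with the idea of combining these three aspects into a single securian score, a minimal sketch might look like the one below. The `OptimizationProfile` type, the `securian_score` function, the equal default weights and the use of a plain weighted sum are all hypothetical choices made for illustration, not part of any existing model. The only properties carried over from the thought experiment are that 0 means “accepting the defaults”, the score can’t go negative, and there is no top level.

```python
from dataclasses import dataclass, fields
from typing import Mapping, Optional


@dataclass(frozen=True)
class OptimizationProfile:
    """Hypothetical ratings for the three aspects of optimization.

    0 means "accepting the defaults"; there is deliberately no upper bound.
    """
    just_in_time_ness: float
    context_awareness: float
    adaptability: float


def securian_score(
    profile: OptimizationProfile,
    weights: Optional[Mapping[str, float]] = None,
) -> float:
    """Combine the aspects into one securian score via a weighted sum (an assumption)."""
    names = [f.name for f in fields(profile)]
    # Equal weights unless the organization decides what matters more to it.
    weights = weights if weights is not None else {name: 1.0 for name in names}
    score = 0.0
    for name in names:
        value = getattr(profile, name)
        weight = weights.get(name, 0.0)
        if value < 0 or weight < 0:
            # The thought experiment rules out negative securians.
            raise ValueError(f"{name}: ratings and weights must be non-negative")
        score += weight * value
    return score  # always >= 0, with no "top level"


defaults_only = OptimizationProfile(0.0, 0.0, 0.0)
some_org = OptimizationProfile(just_in_time_ness=2.0, context_awareness=3.0, adaptability=4.0)
print(securian_score(defaults_only))  # 0.0
print(securian_score(some_org))       # 9.0
print(securian_score(some_org, {"just_in_time_ness": 0.5, "context_awareness": 1.0, "adaptability": 2.0}))  # 12.0
```

Letting each organization pass its own weights is one way to reflect the idea that every organization decides how many securians it wants, rather than being told by a framework.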

Why not effectiveness?

If you got this far, you might have wondered why my definition of optimization doesn’t include an element related to effectiveness. You could argue that it is captured within “just-in-time-ness”, but I’d say it isn’t. Effectiveness is a snapshot in time of how effective a current control is (against current threats). Buying a toolset that is “good for today” doesn’t mean it will be good for tomorrow. This is why I chose to include adaptability instead. I care more about how products can move, change, and combine to solve security problems than about whether they are effective “right now”. The measure is about the mean time to effectiveness (when the landscape, context, or threats change) rather than a snapshot.
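The mean-time-to-effectiveness idea could be sketched as below, assuming (hypothetically) that you record when a landscape, context, or threat change happened and when your controls were judged effective against it again. The function name and the event format are made up for illustration, and deciding when a control counts as “effective again” is the genuinely hard part that this sketch glosses over.

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_time_to_effectiveness(
    events: list[tuple[datetime, datetime]],
) -> timedelta:
    """Average gap between a change in the landscape and regaining effectiveness.

    Hypothetical format: each event is (changed_at, effective_again_at).
    """
    if not events:
        raise ValueError("need at least one change event")
    durations = []
    for changed_at, effective_at in events:
        if effective_at < changed_at:
            raise ValueError("effectiveness cannot be regained before the change")
        durations.append((effective_at - changed_at).total_seconds())
    return timedelta(seconds=mean(durations))


# Two hypothetical changes: one took 2 days to adapt to, the other 4 days.
events = [
    (datetime(2024, 1, 1), datetime(2024, 1, 3)),
    (datetime(2024, 2, 1), datetime(2024, 2, 5)),
]
print(mean_time_to_effectiveness(events))  # 3 days, 0:00:00
```

Unlike a point-in-time effectiveness check, this number only improves if the program keeps getting faster at adapting when things change.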

Conclusion

Who knows? Current maturity models give a false impression of “doing the right things” because they are based on empirically observing what successful organizations do or, worse yet, on guessing what organizations should have done to improve incident outcomes. While useful, I don’t think they tell the whole story about the cyber security maturity of an organization. Mine isn’t complete, and would probably be very hard to “measure”, but it might at least give decision makers a better idea of what their organization needs to look like to actually be mature in this space.