Over the break I had a chance to read through the Partnership on AI (PAI) Shared Prosperity Guidelines, and it was a pretty interesting read. Responsible AI has been a hot topic for me, and one that I’ve spent some time looking at. Recently I worked with the Open Alliance for Cloud Adoption on developing their paper on Responsible AI.

Overall, I found this to be an interesting document. It tries to cast a wide net and put into perspective the opportunities and risks associated with AI development. This attempt to “quantify” the effect of AI is interesting, and I really like the idea of the risk signals used in the document. Of course, these risk signals likely apply to “technology” in general rather than just AI. The concept could probably be extended to help businesses weigh the ethics of all their decisions.

“Benefits will accrue to the workers with the highest control over how AI shows up in their jobs. Harms will occur for workers with minimal agency over workplace AI deployment”

The above quote caught my eye. While it is hard to disagree with its premise, I think it paints a pretty rosy picture of what is to come. I’m not sure which workers, if any, will actually have the agency to control how AI shows up in their workplace. I would argue that most workers have little agency over the tooling and technologies they currently use in their day-to-day work, and AI won’t change this paradigm. Simply put, the goals of those in charge differ from the goals of those doing the work.

“We consider an AI system to be serving to advance the prosperity of a given group if it boosts the demand for labour of that group”

This was another interesting quote. The paper is very focused on labour, as that is currently (in our capitalist system anyways) the only way to achieve prosperity for most people. The paper explicitly excludes other “mechanisms” for balancing prosperity, such as universal basic income, and so has to focus solely on working for pay. I would honestly love it if an AI could do my job and I still got paid for it, but this is unlikely to happen.

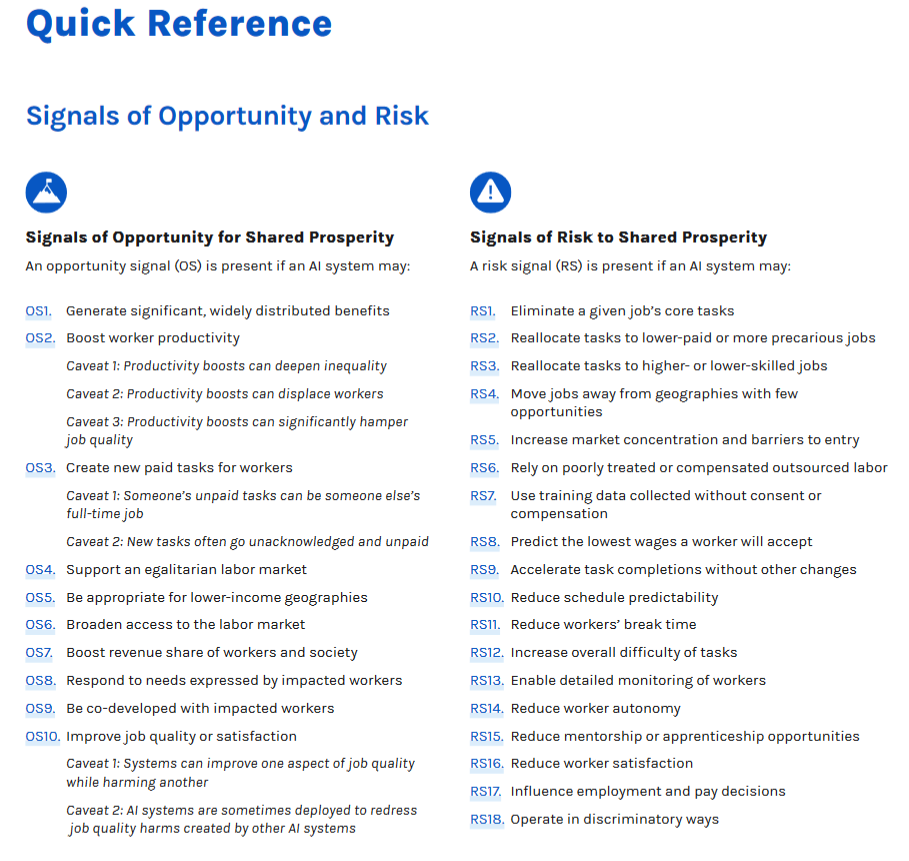

Here is a quick reference to the PAI signals.

When I first read the list, I found the risk signals particularly interesting. There are various businesses in the world whose sole purpose is to execute on some of these risks. While the document specifically calls out that profit is important, it does try to strike a balance by acknowledging that there are other areas to consider. Here is another fun quote from the text.

“Profit-generating activities do not necessarily have to harm workers and communities, but some of them do”.

No kidding.

Some other random observations.

OS2: Boost Worker Productivity

In this opportunity, they make the claim that

“A more productive worker is more valuable to their employer and ….. is expected to be paid more”

Again, I think this is quite a rosy picture of how corporations will act. I find it hard to believe that the MBAs and lawyers at the top of organizations will attribute the productivity gained from AI systems to workers and therefore pay them more, particularly when there will be a cost associated with building, training, and running that AI. I see it playing out differently. In simple terms, the cost of a worker producing something can be viewed like this.

Pie chart, “How MBAs view workers”: Person Cost 80, Training Cost 20.

The first thing I see happening is that the training cost will shift from training the person to training the AI, and this will come directly out of the benefits afforded to workers. There are lots of market conditions that make this a smart move. Most training is delivered with little regard for how adults actually learn (think of the ITIL training you’ve been to) and ultimately yields varying results across the worker base. Training the AI, however, will yield predictable outcomes (even if those outcomes start off not very good) and has the potential to improve over time. So, it’ll likely end up something like this.

Pie chart, “How MBAs view workers, with AI”: Person Cost 80, Training Cost 10, AI Cost 10.

The next logical move here is to, instead of pulling the AI cost from the training budget, pull it from the person cost budget. The argument will be made that the worker is only that productive because of the AI, and therefore, should actually be paid less (since the AI cost isn’t something the worker pays for).
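To make that arithmetic concrete, here is a minimal Python sketch of the two moves. The 80/20 baseline and the AI cost of 10 come from the charts above; the function name `shift_ai_cost` and the resulting 70/20/10 split are my own illustrative assumptions, not figures from the PAI document.

```python
def shift_ai_cost(budget, ai_cost, source):
    """Return a new budget where `ai_cost` is carved out of the `source` bucket.

    `budget` maps a cost category to its share of the total (hypothetical units).
    """
    if budget[source] < ai_cost:
        raise ValueError(f"{source} cannot absorb an AI cost of {ai_cost}")
    updated = dict(budget)  # copy so the baseline stays untouched
    updated[source] -= ai_cost
    updated["AI Cost"] = updated.get("AI Cost", 0) + ai_cost
    return updated


baseline = {"Person Cost": 80, "Training Cost": 20}

# First move: the AI is funded out of the training budget.
from_training = shift_ai_cost(baseline, 10, "Training Cost")

# Next move: the AI is funded out of what the worker is paid (assumed split).
from_person = shift_ai_cost(baseline, 10, "Person Cost")

print(from_training)
print(from_person)
```

In both cases the total spend stays the same; the only thing that changes is whose slice shrinks to pay for the AI.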

RS1: Eliminate a given job’s core tasks

Another quote:

“… those tasks should be weighted by their importance for the production of the final output. We consider task elimination above 10% significant enough to warrant attention”

I honestly wonder what the process would be to actually understand and quantify this. In linear systems, such as manufacturing, there might be a direct causal link between a task performed and some sort of output. In knowledge and/or creative work, there likely isn’t one.
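For what it’s worth, the arithmetic itself is simple once you have the weights; the hard part is getting them. Here is a rough Python sketch of how I read the quote, assuming each task can be given an importance weight. Only the 10% threshold comes from the document; the task names, weights, and function name are hypothetical.

```python
SIGNIFICANCE_THRESHOLD = 0.10  # "task elimination above 10%" from the quote


def weighted_elimination_share(tasks):
    """Share of a job's importance-weighted tasks that the AI eliminates.

    `tasks` is a list of (name, importance_weight, eliminated) tuples.
    """
    total_weight = sum(weight for _, weight, _ in tasks)
    eliminated_weight = sum(weight for _, weight, gone in tasks if gone)
    return eliminated_weight / total_weight


# Hypothetical job; the weights are invented purely for illustration.
job_tasks = [
    ("drafting reports", 50, False),
    ("data entry", 15, True),
    ("client meetings", 35, False),
]

share = weighted_elimination_share(job_tasks)
warrants_attention = share > SIGNIFICANCE_THRESHOLD
print(f"{share:.0%} of weighted tasks eliminated; flag: {warrants_attention}")
```

Everything interesting is hidden in those weights. For knowledge or creative work, I don’t know who would assign them or how anyone would defend the numbers.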

RS5: Increase market concentration and barriers to entry

Isn’t this the goal of literally every business anywhere on the planet? From an AI-specific standpoint, this is likely the goal of every “narrow” AI function hidden behind APIs or other subscription mechanisms. As companies aggregate data from their customers, their models become better trained, and that value is provided back to their customers only if they pay the vig. I think this is one reason why I was so drawn to Gaia-X: the idea that users should be in control of their data and be able to use any model or service they wish, securely and without vendor lock-in.

Conclusion

I think there is a lot to consider on the topic of Responsible AI, and documents like this offer a chance to have thought-provoking discussions around the central issues. I like that this is industry led, and I think the next need here is some sort of mechanism to evaluate AI use cases based on the signals presented in the document. Maybe we need to follow the CSA STAR example and create a registry where companies can self-attest to their AI use cases. This type of transparency would be a good first step.